Introduction

Whether a page is seen or overlooked in search often depends on small technical controls such as robots meta tags. These HTML elements give search engine crawlers instructions about whether a page should be indexed, whether its links should be followed, and what information may appear in search results.

For website owners, SEO professionals, and developers, mastering robots meta tags is essential for optimizing visibility, managing crawl budgets, and ensuring that only relevant content appears in search results. Whether you’re running a small blog or a large e-commerce site, understanding how to use these tags effectively can significantly impact your site’s performance in search engines like Google, Bing, and Yandex.

This article will guide you through the fundamentals of robots meta tags, their various directives, and best practices for implementation. You’ll also learn how to integrate them with other SEO tools and avoid common pitfalls that could undermine your efforts.

What Are Robots Meta Tags and Why They Matter

Robots meta tags are HTML elements placed in the <head> section of a webpage. They serve as a communication channel between your website and search engine crawlers, instructing them on how to handle specific pages.

The primary purpose of these tags is to control page indexing and crawling behavior. By using directives like noindex, nofollow, or noarchive, you can tell search engines whether to include a page in their index, follow its links, or show cached versions.
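To make the idea concrete, here is a minimal sketch of how a script might read the robots directives out of a page, using only Python's standard library. The class and function names (RobotsMetaParser, robots_directives) are illustrative and not part of any SEO tool, and the sketch only looks at the generic name="robots" tag, not crawler-specific names.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # attrs is a list of (name, value) pairs; value may be None.
        attr_map = {name.lower(): (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() != "robots":
            return
        # Directives are comma-separated and case-insensitive.
        for token in attr_map.get("content", "").split(","):
            token = token.strip().lower()
            if token:
                self.directives.add(token)


def robots_directives(html_text):
    """Return the set of robots directives declared in the given HTML."""
    parser = RobotsMetaParser()
    parser.feed(html_text)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(sorted(robots_directives(page)))
```

A page with no robots meta tag yields an empty set, which search engines treat as the default: index the page and follow its links.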

While it might seem like a minor technical detail, the impact of these tags on your site’s SEO is significant. For example, using noindex on duplicate or low-value pages keeps them out of search results, so they do not compete with or dilute your stronger pages. Similarly, nofollow tells crawlers not to follow the links on a page, which can keep authority from passing to untrusted or irrelevant destinations.

These tags are particularly useful when managing large websites with thousands of pages, where manual oversight is impractical. Proper configuration ensures that search engines focus on the most important content, improving both user experience and search rankings.

How Robots Meta Tags Impact SEO Performance

Robots meta tags directly influence several key aspects of SEO:

1. Indexation Control

By using noindex, you can prevent specific pages from appearing in search results. This is especially useful for:

– Admin or login pages

– Thank-you pages

– Duplicate content

– Pages under development

2. Crawl Budget Optimization

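Search engines have limited resources for crawling your site. A crawler still has to fetch a page to read its robots meta tag, so noindex does not save crawl budget right away. Over time, though, search engines tend to revisit noindexed pages less often, and nofollow can keep crawlers from discovering low-value URLs through a page’s links, leaving more capacity for your valuable content.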

3. Snippet Management

Directives like nosnippet and max-snippet allow you to control how your content appears in search results. This helps maintain brand consistency and prevents sensitive or outdated content from being displayed.
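Snippet directives differ from noindex or nofollow in that some of them carry a value, written as name:value. The sketch below shows one way to pull those values out of a content string. The function name parse_preview_limits is hypothetical, and max-image-preview and max-video-preview are related Google directives that this article does not otherwise cover.

```python
def parse_preview_limits(content):
    """Extract name:value preview limits from a robots content string."""
    limits = {}
    for token in content.split(","):
        name, sep, value = token.partition(":")
        name = name.strip().lower()
        value = value.strip().lower()
        if not sep or not value:
            continue  # plain directives such as noindex carry no value
        if name in ("max-snippet", "max-video-preview"):
            try:
                limits[name] = int(value)  # length limit; -1 means no limit
            except ValueError:
                continue  # ignore malformed numbers
        elif name == "max-image-preview":
            limits[name] = value  # none, standard, or large
    return limits


print(parse_preview_limits("noindex, max-snippet:50, max-image-preview:large"))
```

A max-snippet value of 0 asks for no text snippet at all, which has the same effect as nosnippet.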

4. Link Equity Distribution

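A nofollow directive in the robots meta tag applies to every link on the page, telling search engines not to follow them or pass link authority through them. To target only specific external or untrusted links, use the rel="nofollow" attribute on those individual links instead. Either approach helps preserve your site’s credibility and avoids potential spam issues.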

5. Content Privacy

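Tags like noarchive ask search engines that offer cached copies not to show one for your pages, which is useful for time-sensitive content. Note that this does not restrict access to the page itself, so genuinely sensitive content still needs authentication or other access controls.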

By strategically applying these tags, you can improve your site’s SEO performance, keep low-value pages out of the index, and ensure that only the most relevant content is visible to users.

Step-by-Step Implementation Framework

1. Define or Audit the Current Situation

Before implementing robots meta tags, conduct a thorough audit of your website. Identify pages that should not be indexed (e.g., admin areas, thank-you pages) and determine which directives to apply.

Use tools like Google Search Console, Screaming Frog, or Ahrefs to analyze your site’s structure and identify any existing conflicts or misconfigurations.
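One conflict worth checking during an audit is a page that carries noindex but is also disallowed in robots.txt: a crawler that is not allowed to fetch the page never sees the tag, so the URL can remain indexed. The sketch below flags such pages using Python's standard urllib.robotparser. The function name find_hidden_noindex, the example.com URLs, and the shape of the pages mapping (URL to a set of directives, as exported from a crawl) are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser


def find_hidden_noindex(robots_txt, pages, user_agent="*"):
    """Return URLs that are noindex but blocked from crawling.

    robots_txt: the text of the site's robots.txt file.
    pages: dict mapping each URL to the set of robots directives found on it.
    """
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    conflicts = []
    for url, directives in pages.items():
        # A blocked crawler cannot read the page, so its noindex is never seen.
        if "noindex" in directives and not rules.can_fetch(user_agent, url):
            conflicts.append(url)
    return sorted(conflicts)


robots_txt = "User-agent: *\nDisallow: /admin/\n"
pages = {
    "https://example.com/admin/login": {"noindex"},
    "https://example.com/thank-you": {"noindex", "nofollow"},
    "https://example.com/products/shirt": set(),
}
print(find_hidden_noindex(robots_txt, pages))
```

For pages flagged this way, the usual remedy is to allow crawling so the noindex can be read, rather than relying on both mechanisms at once.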

2. Apply Tools, Methods, or Tactics

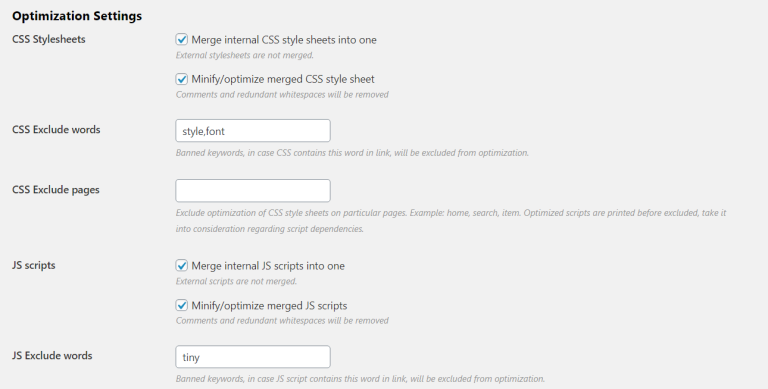

Depending on your platform, there are multiple ways to implement robots meta tags:

WordPress Users

Use plugins like Yoast SEO or Rank Math to manage indexing directives. These tools provide per-page settings for noindex and nofollow, so you do not need to edit templates by hand.

Manual HTML Editing

For custom sites, edit the <head> section of your HTML files. Add the following code for a basic noindex directive:

<meta name="robots" content="noindex">

You can also combine directives by separating them with commas in the content attribute, for example content="noindex, nofollow".
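If your pages are generated by a script or template, a small helper can build the tag from a list of directives. This is only a sketch: build_robots_meta and its bot parameter are hypothetical names, and the helper does not check that each directive is one search engines actually recognize.

```python
from html import escape


def build_robots_meta(directives, bot="robots"):
    """Build a robots meta tag from a list of directive strings.

    bot is the value of the name attribute; "robots" addresses all crawlers.
    """
    cleaned = []
    for directive in directives:
        directive = directive.strip().lower()
        if directive and directive not in cleaned:
            cleaned.append(directive)  # keep order, drop duplicates
    if not cleaned:
        raise ValueError("at least one directive is required")
    content = ", ".join(cleaned)
    return '<meta name="{}" content="{}">'.format(
        escape(bot, quote=True), escape(content, quote=True)
    )


print(build_robots_meta(["noindex", "nofollow"]))
```

The resulting string belongs inside the page's <head>, just like the hand-written tag above.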

X-Robots-Tag for Non-HTML Files

If you’re managing non-HTML content (like PDFs or images), use the X-Robots-Tag via HTTP headers. On an Apache server with mod_headers enabled, this can be done in the .htaccess file:

<Files "example.pdf">
  Header set X-Robots-Tag "noindex"
</Files>

On Nginx, use the add_header directive, for example add_header X-Robots-Tag "noindex"; inside a server or location block that matches the files.
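Once the header is configured, it helps to confirm what the server actually sends. The sketch below interprets response headers that you have already collected, for instance from a crawl export; it makes no network requests. The function names are illustrative, and the parsing is deliberately simple: it assumes plain comma-separated directives and does not handle crawler-specific prefixes such as googlebot: noindex.

```python
def x_robots_directives(headers):
    """Return the directives found in X-Robots-Tag response headers.

    headers: list of (name, value) pairs, since the header may be repeated.
    """
    found = set()
    for name, value in headers:
        if name.strip().lower() != "x-robots-tag":
            continue  # header names are case-insensitive
        for token in value.split(","):
            token = token.strip().lower()
            if token:
                found.add(token)
    return found


def blocks_indexing(headers):
    """True if the headers ask search engines not to index the resource."""
    directives = x_robots_directives(headers)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


pdf_headers = [
    ("Content-Type", "application/pdf"),
    ("X-Robots-Tag", "noindex, noarchive"),
]
print(blocks_indexing(pdf_headers))
```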

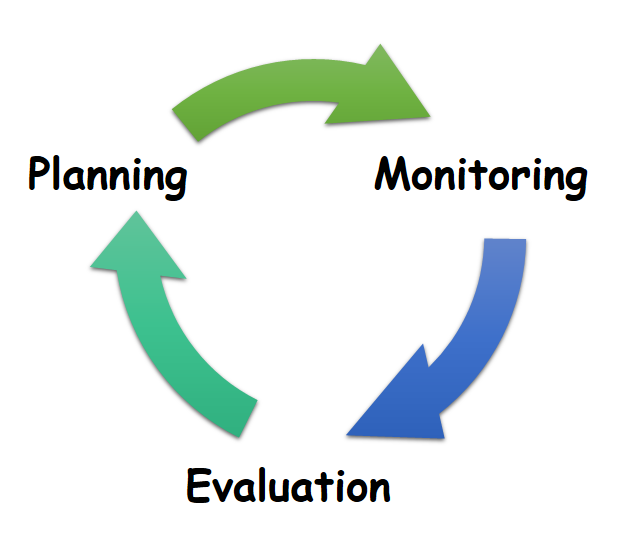

3. Measure, Analyze, and Optimize

After implementation, monitor your site’s performance using tools like Google Search Console and Bing Webmaster Tools. Check for crawl errors, indexing status, and changes in traffic.

If you want to compare configurations, change the directives on a limited set of pages first and watch how their indexing and traffic respond before rolling the change out site-wide. Review your directives regularly as your site evolves.

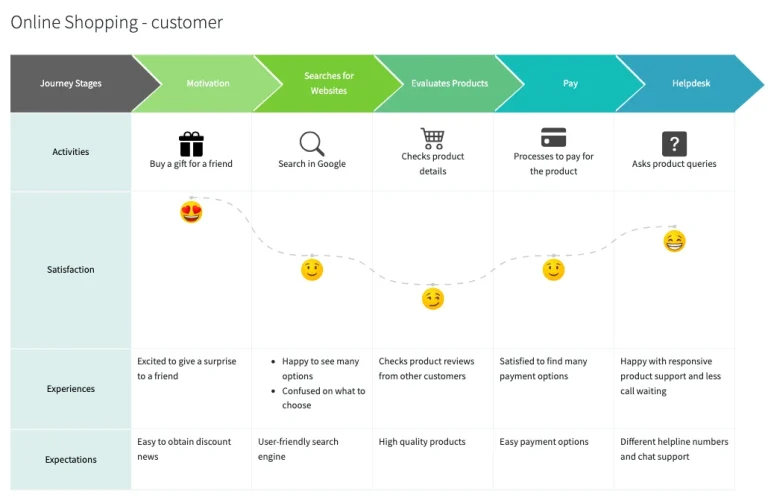

Hypothetical Case Study

Consider a hypothetical e-commerce site that sells seasonal products. The site has thousands of product pages, many of which are duplicates or outdated. Without proper management, search engines may index irrelevant or low-quality pages, harming the site’s overall SEO performance.

By implementing noindex on duplicate product variations and outdated seasonal pages, while leaving internal navigation links followable so crawlers can still reach the important products, the site reduces the number of low-value pages in search results. Over time, this can lead to improved organic traffic, higher engagement, and better conversion rates.

Additionally, using noarchive on promotional pages means that users do not come across outdated deals through cached copies, which helps maintain trust and brand reputation.

Tools and Techniques for Robots Meta Tags

Here are some of the most effective tools for managing robots meta tags:

- Yoast SEO – A popular WordPress plugin that simplifies the process of adding noindex, nofollow, and other directives.

- Rank Math – Another powerful SEO plugin that offers advanced settings for managing robots tags.

- Google Search Console – Use this tool to check how your site is being indexed and identify any issues with robots tags.

- Screaming Frog – A desktop crawler that helps audit your site’s robots meta tags and detect potential conflicts.

- Ahrefs – Provides insights into how your pages are indexed and allows you to track changes over time.

Each of these tools can help streamline the process of configuring and monitoring robots meta tags, ensuring that your site remains optimized for search engines.

Future Trends and AI Implications

As search engines become more sophisticated, the role of robots meta tags will continue to evolve. With the rise of AI-powered search features like Google’s Search Generative Experience (SGE), since rebranded as AI Overviews, the way content is indexed and displayed is changing rapidly.

AI-driven results appear to weigh relevance, user intent, and content quality heavily. Indexing is a prerequisite but not a guarantee: even if a page is indexed, it may not appear in AI-generated results unless it meets those criteria.

To stay ahead, consider the following trends:

– Semantic SEO: Focus on creating high-quality, context-rich content that aligns with user intent.

– Entity-Based Structuring: Use structured data and semantic markup to help AI models understand your content better.

– Multimodal Optimization: Ensure your content is optimized for text, images, and video, as AI systems increasingly rely on multimodal inputs.

By adapting your robots meta tag strategy to these changes, you can ensure your content remains visible and valuable in the evolving search landscape.

Key Takeaways

- Robots meta tags are essential for controlling how search engines interact with your website.

- Directives like noindex, nofollow, and noarchive help manage indexing, link following, and cached copies.

- Proper configuration improves SEO performance, reduces crawl budget waste, and keeps low-value or outdated content out of search results.

- Use tools like Yoast SEO, Rank Math, and Google Search Console to simplify implementation and monitoring.

- Stay informed about future trends, including AI-driven search and multimodal optimization, to remain competitive.

By mastering robots meta tags, you gain greater control over your site’s visibility and performance in search results. Start implementing these strategies today to ensure your content is seen by the right audience at the right time.
