In artificial intelligence and machine learning, the ability to encode factual relationships underpins algorithmic trust. Semantic Fact Embedding is a technique for representing real-world knowledge as numerical vectors so that machines can compare facts and reason over them. This article explains how Semantic Fact Embedding works, why it matters for modern AI systems, and how to implement it to improve trust and accuracy.

What Is Semantic Fact Embedding and Why It Matters

Semantic Fact Embedding is a method of representing factual information as numerical vectors in a high-dimensional space. These vectors capture the meaning and relationships between entities, enabling machines to understand context, infer connections, and make more accurate predictions. Unlike traditional keyword-based approaches, which rely on surface-level matches, semantic embeddings allow models to grasp the deeper semantics of language and data.
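To make the idea of vectors in a shared space concrete, here is a minimal sketch in Python. The three-dimensional vectors and the `toy_embeddings` name are invented for illustration; real embeddings are learned from data and usually have hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-picked toy vectors (an assumption, not the output of a trained model).
toy_embeddings = {
    "Paris": [0.9, 0.1, 0.0],
    "France": [0.8, 0.2, 0.1],
    "banana": [0.0, 0.1, 0.9],
}

sim_related = cosine_similarity(toy_embeddings["Paris"], toy_embeddings["France"])
sim_unrelated = cosine_similarity(toy_embeddings["Paris"], toy_embeddings["banana"])

# Related entities point in similar directions, unrelated ones do not.
print(sim_related > sim_unrelated)
```

Entities that share meaning end up with a higher similarity score than unrelated ones, which is the property that keyword matching cannot provide.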

This technique is particularly valuable in natural language processing (NLP), where understanding the nuances of human language is critical. By encoding facts in a structured format, Semantic Fact Embedding helps AI systems build a more comprehensive and accurate representation of the world. This is especially important for applications like search engines, recommendation systems, and chatbots, where the ability to understand and retrieve relevant information is paramount.

One of the key advantages of Semantic Fact Embedding is its ability to handle ambiguity and variability in language. For example, the word “bank” can refer to a financial institution or the side of a river. A keyword matcher, or a static word embedding that assigns a single vector to each word, cannot tell these meanings apart. Contextual embeddings derive the vector from the surrounding words, so the two senses receive different representations.
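The sketch below illustrates the principle of using context to choose a sense. It is a deliberately simplified stand-in, not how a contextual model such as BERT works internally: the two-dimensional vectors, the `CONTEXT_VECTORS` and `SENSE_VECTORS` tables, and the `disambiguate` helper are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean_vector(vectors):
    """Element-wise average of a non-empty list of vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


# Assumed toy dimensions: [finance, nature].
CONTEXT_VECTORS = {
    "loan": [1.0, 0.0],
    "deposit": [0.9, 0.1],
    "river": [0.0, 1.0],
    "fishing": [0.1, 0.9],
}
SENSE_VECTORS = {
    "bank (financial institution)": [1.0, 0.0],
    "bank (river side)": [0.0, 1.0],
}


def disambiguate(context_words):
    """Pick the sense whose vector is closest to the averaged context."""
    known = [CONTEXT_VECTORS[w] for w in context_words if w in CONTEXT_VECTORS]
    if not known:
        return None  # no usable context, so no decision
    context = mean_vector(known)
    return max(SENSE_VECTORS, key=lambda sense: cosine(context, SENSE_VECTORS[sense]))


print(disambiguate(["she", "went", "fishing", "by", "the", "river"]))
print(disambiguate(["the", "loan", "needs", "a", "deposit"]))
```

Words outside the toy vocabulary are ignored, and the function returns `None` when nothing in the context is known rather than guessing.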

How Semantic Fact Embedding Impacts SEO Performance

In the realm of search engine optimization (SEO), Semantic Fact Embedding plays a crucial role in improving visibility, engagement, and user satisfaction. Search engines like Google are increasingly relying on semantic understanding to deliver more relevant and personalized results. By leveraging semantic embeddings, websites can better align with user intent and provide more meaningful content.

For instance, consider a user searching for “best hiking trails in Colorado.” A strict keyword-based approach looks for pages containing the phrase “hiking trails in Colorado,” whereas a semantic model also considers related concepts such as “mountain biking routes,” “wildlife spotting areas,” and “camping spots.” This broader understanding lets search engines return more comprehensive results, which can in turn improve user engagement and click-through rates.
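The contrast between phrase matching and semantic ranking can be sketched as follows. The page titles, the `DOC_VECTORS` table, and the three assumed dimensions are made up for illustration; a production system would obtain the vectors from a trained embedding model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Assumed toy dimensions: [outdoor trails, Colorado region, urban dining].
DOC_VECTORS = {
    "Rocky Mountain backpacking routes near Denver": [0.9, 0.8, 0.0],
    "Best hiking trails in Colorado for beginners": [1.0, 0.9, 0.0],
    "Top-rated restaurants in downtown Denver": [0.0, 0.7, 0.9],
}
QUERY = "hiking trails in Colorado"
QUERY_VECTOR = [1.0, 0.9, 0.0]  # hypothetical embedding of the query


def keyword_matches(query, docs):
    """Return only the documents that contain the exact query phrase."""
    return [doc for doc in docs if query.lower() in doc.lower()]


def semantic_ranking(query_vector, doc_vectors):
    """Order all documents from most to least similar to the query vector."""
    return sorted(
        doc_vectors,
        key=lambda doc: cosine(query_vector, doc_vectors[doc]),
        reverse=True,
    )


exact = keyword_matches(QUERY, DOC_VECTORS)
ranked = semantic_ranking(QUERY_VECTOR, DOC_VECTORS)
print(exact)
print(ranked)
```

Phrase matching finds only the page that repeats the query wording, while the similarity ranking also places the backpacking page near the top and pushes the restaurant page to the bottom.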

Moreover, Semantic Fact Embedding is relevant to E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness), the framework in Google’s Search Quality Rater Guidelines for judging content quality. E-E-A-T is not itself a direct ranking factor, but content that states facts clearly and consistently is easier for both human raters and semantic systems to assess as authoritative and reliable.

Step-by-Step Implementation Framework

Implementing Semantic Fact Embedding involves several steps, from defining the scope of the project to measuring and optimizing performance. Here’s a practical framework to guide you through the process:

Define or Audit the Current Situation

Begin by identifying the specific use cases for Semantic Fact Embedding. Are you looking to improve search functionality, enhance recommendation systems, or build a knowledge graph? Conduct an audit of your existing data and infrastructure to determine what needs to be improved or expanded.

Apply Tools, Methods, or Tactics

Choose tools and techniques suited to your data. Popular options include pre-trained contextual models such as BERT, which can be fine-tuned for specific tasks, and static word-embedding methods such as Word2Vec and FastText, which can be trained on your own corpus. You may also need to develop custom embeddings if your data requires specialized handling. Libraries such as TensorFlow, PyTorch, and spaCy can be used to implement and train your models.

Measure, Analyze, and Optimize

Once your embeddings are created, evaluate them on a labeled test set. Cosine similarity scores how close two embeddings are; precision, recall, and F1 score then measure how well the results retrieved or classified with those scores match the expected answers. Monitor user interactions and feedback to identify areas for improvement, and continuously refine your models based on new data and changing requirements.
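As a rough illustration of the measurement step, the following sketch computes precision, recall, and F1 for a single query. The document IDs and the `precision_recall_f1` helper are hypothetical; in practice these values are usually averaged over many queries.

```python
def precision_recall_f1(retrieved, relevant):
    """Compare retrieved items with the items known to be relevant."""
    retrieved, relevant = set(retrieved), set(relevant)
    true_positives = len(retrieved & relevant)
    precision = true_positives / len(retrieved) if retrieved else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical results for one query.
retrieved_docs = ["doc1", "doc2", "doc3", "doc4"]  # returned by the system
relevant_docs = ["doc1", "doc3", "doc5"]           # labeled as correct

precision, recall, f1 = precision_recall_f1(retrieved_docs, relevant_docs)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# precision=0.50 recall=0.67 f1=0.57
```

Two of the four retrieved documents are relevant (precision 0.50), and two of the three relevant documents were found (recall 0.67).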

Hypothetical Case Study

Consider a hypothetical scenario where a travel company uses Semantic Fact Embedding to enhance its recommendation system. The company has a vast database of destinations, activities, and user preferences. By encoding factual relationships between these elements, the system can provide more personalized and relevant recommendations.

For example, if a user expresses interest in “beach vacations,” the system might suggest destinations like Hawaii, Bali, or the Maldives. However, it could also recommend related experiences such as snorkeling, surfing, or beachside dining. By capturing the semantic relationships between these concepts, the system delivers a richer and more engaging user experience.
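A simplified version of this recommendation logic might look like the sketch below. The `FACTS` triples and the `recommend` helper are assumptions made for illustration, and the vectors are hand-built multi-hot encodings of those facts rather than learned embeddings.

```python
import math

# Hypothetical facts stored as (destination, relation, activity) triples.
FACTS = [
    ("Hawaii", "offers", "surfing"),
    ("Hawaii", "offers", "snorkeling"),
    ("Bali", "offers", "surfing"),
    ("Bali", "offers", "beachside dining"),
    ("Maldives", "offers", "snorkeling"),
    ("Maldives", "offers", "beachside dining"),
    ("Zermatt", "offers", "skiing"),
]
ACTIVITIES = sorted({activity for _, _, activity in FACTS})


def destination_vectors(facts):
    """One vector per destination, with a 1.0 for every activity it offers."""
    vectors = {}
    for destination, _, activity in facts:
        vector = vectors.setdefault(destination, [0.0] * len(ACTIVITIES))
        vector[ACTIVITIES.index(activity)] = 1.0
    return vectors


def cosine(a, b):
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0


def recommend(interests, facts=FACTS, k=3):
    """Return up to k destinations that match the user's interests best."""
    user = [1.0 if activity in interests else 0.0 for activity in ACTIVITIES]
    scored = [(cosine(user, vec), dest) for dest, vec in destination_vectors(facts).items()]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then by name
    return [dest for score, dest in scored[:k] if score > 0]


print(recommend({"snorkeling", "surfing"}))
# ['Hawaii', 'Bali', 'Maldives']
```

Hawaii ranks first because it matches both interests, while Zermatt is never suggested because it shares no activity with the user.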

In this hypothetical scenario, the company might see outcomes such as a 30% increase in user engagement, a 25% boost in conversion rates, and higher customer satisfaction. These figures are illustrative rather than measured; they indicate the kind of metrics a team would track to judge whether Semantic Fact Embedding delivers tangible benefits.

Tools and Techniques for Semantic Fact Embedding

Several tools and techniques are available to help you implement Semantic Fact Embedding effectively. Here are some of the most popular options:

-

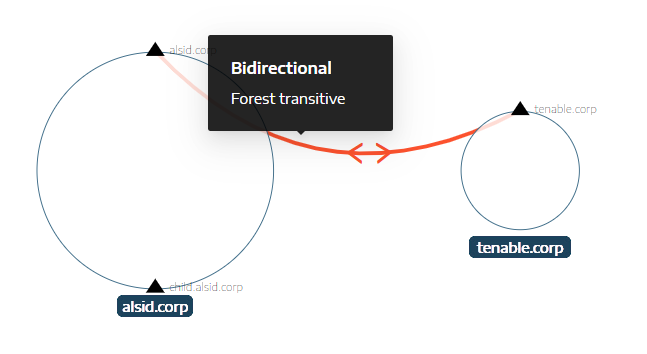

BERT (Bidirectional Encoder Representations from Transformers)

BERT is a pre-trained model developed by Google that excels at understanding the context of words in a sentence. It can be fine-tuned for specific tasks such as text classification, question answering, and semantic similarity.

Word2Vec

Word2Vec is a group of models that convert words into numerical vectors. It learns the meaning of each word from the contexts in which it appears, making it well suited to tasks like clustering and solving word analogies.

FastText

FastText is another powerful tool for creating word embeddings. It extends the Word2Vec approach by considering subword information, which makes it particularly effective for languages with rich morphology.
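The subword idea can be illustrated with a short sketch that extracts character n-grams from a word. The `char_ngrams` helper is hypothetical and is not part of the FastText library; the `<` and `>` boundary markers and the 3-to-6 length range follow the description in the original FastText paper, which additionally treats the whole word as one extra unit.

```python
def char_ngrams(word, min_n=3, max_n=6):
    """Character n-grams of a word wrapped in '<' and '>' boundary markers."""
    wrapped = f"<{word}>"
    ngrams = []
    for n in range(min_n, max_n + 1):
        for start in range(len(wrapped) - n + 1):
            ngrams.append(wrapped[start:start + n])
    return ngrams


print(char_ngrams("where", min_n=3, max_n=3))
# ['<wh', 'whe', 'her', 'ere', 're>']

# Related word forms share subwords, so they can share learned information.
shared = set(char_ngrams("walking")) & set(char_ngrams("walked"))
print(sorted(shared))
```

Because "walking" and "walked" share n-grams such as "wal" and "alk", a model built on subwords can relate inflected forms, including forms it has rarely or never seen in training.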

spaCy

spaCy is a library for advanced NLP tasks, including named entity recognition, dependency parsing, and text classification. It integrates well with other tools and provides efficient processing of large datasets.

TensorFlow and PyTorch

These frameworks are widely used for building and training machine learning models. They offer flexibility and scalability, making them suitable for complex projects involving semantic embeddings.

Knowledge Graphs

Knowledge graphs are structured representations of information that capture the relationships between entities. They can be used to enhance semantic embeddings by providing additional context and structure.
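One well-known way to embed knowledge graph facts is a translation-based model in the style of TransE, in which a true fact (head, relation, tail) should satisfy head + relation ≈ tail. The article does not prescribe this model, so treat the sketch below as one possible illustration. The vectors are set by hand instead of being trained, and the `ENTITY` and `RELATION` tables and both helper functions are hypothetical.

```python
import math

# Toy 2-d embeddings chosen by hand so that head + relation lands on the tail.
ENTITY = {
    "Paris": [1.0, 0.0],
    "France": [1.0, 1.0],
    "Berlin": [3.0, 0.0],
    "Germany": [3.0, 1.0],
}
RELATION = {"capital_of": [0.0, 1.0]}


def transe_distance(head, relation, tail):
    """Euclidean distance between (head + relation) and tail; lower is more plausible."""
    h, r, t = ENTITY[head], RELATION[relation], ENTITY[tail]
    return math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def most_plausible_tail(head, relation):
    """Rank every other entity as a candidate tail and return the closest one."""
    candidates = [entity for entity in ENTITY if entity != head]
    return min(candidates, key=lambda entity: transe_distance(head, relation, entity))


print(transe_distance("Paris", "capital_of", "France"))   # 0.0
print(transe_distance("Paris", "capital_of", "Germany"))  # 2.0
print(most_plausible_tail("Berlin", "capital_of"))        # Germany
```

In a trained model the entity and relation vectors would be learned so that known facts score low distances and corrupted facts score high ones, which lets the system rank candidate answers for a missing link.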

Future Trends and AI Implications

As AI continues to evolve, the importance of Semantic Fact Embedding will only grow. With the rise of generative AI and multimodal models, the ability to encode and understand factual relationships will become even more critical. Future developments may include more sophisticated models that can handle complex reasoning, contextual understanding, and real-time updates.

Additionally, combining Semantic Fact Embedding with other AI techniques, such as reinforcement learning and retrieval over knowledge graphs, will open up new possibilities for applications in fields like healthcare, finance, and education. As these models become more advanced, they may provide more accurate and reliable insights, further enhancing algorithmic trust.

Key Takeaways

- Semantic Fact Embedding encodes factual relationships in a structured format, enabling machines to understand and represent real-world knowledge.

- Improved SEO performance comes from aligning content with user intent and delivering more relevant and personalized results.

- Implementation involves defining the scope, applying the right tools, and continuously measuring and optimizing performance.

- Applications such as recommendation systems illustrate the potential benefits of Semantic Fact Embedding, including richer suggestions and improved user engagement.

- Future Trends suggest that Semantic Fact Embedding will play an even more significant role in the development of advanced AI systems.

As AI systems take on more tasks, the ability to encode and understand factual relationships will be essential for making them trustworthy and effective. Businesses and developers that adopt Semantic Fact Embedding can build on this foundation as the technology matures.
